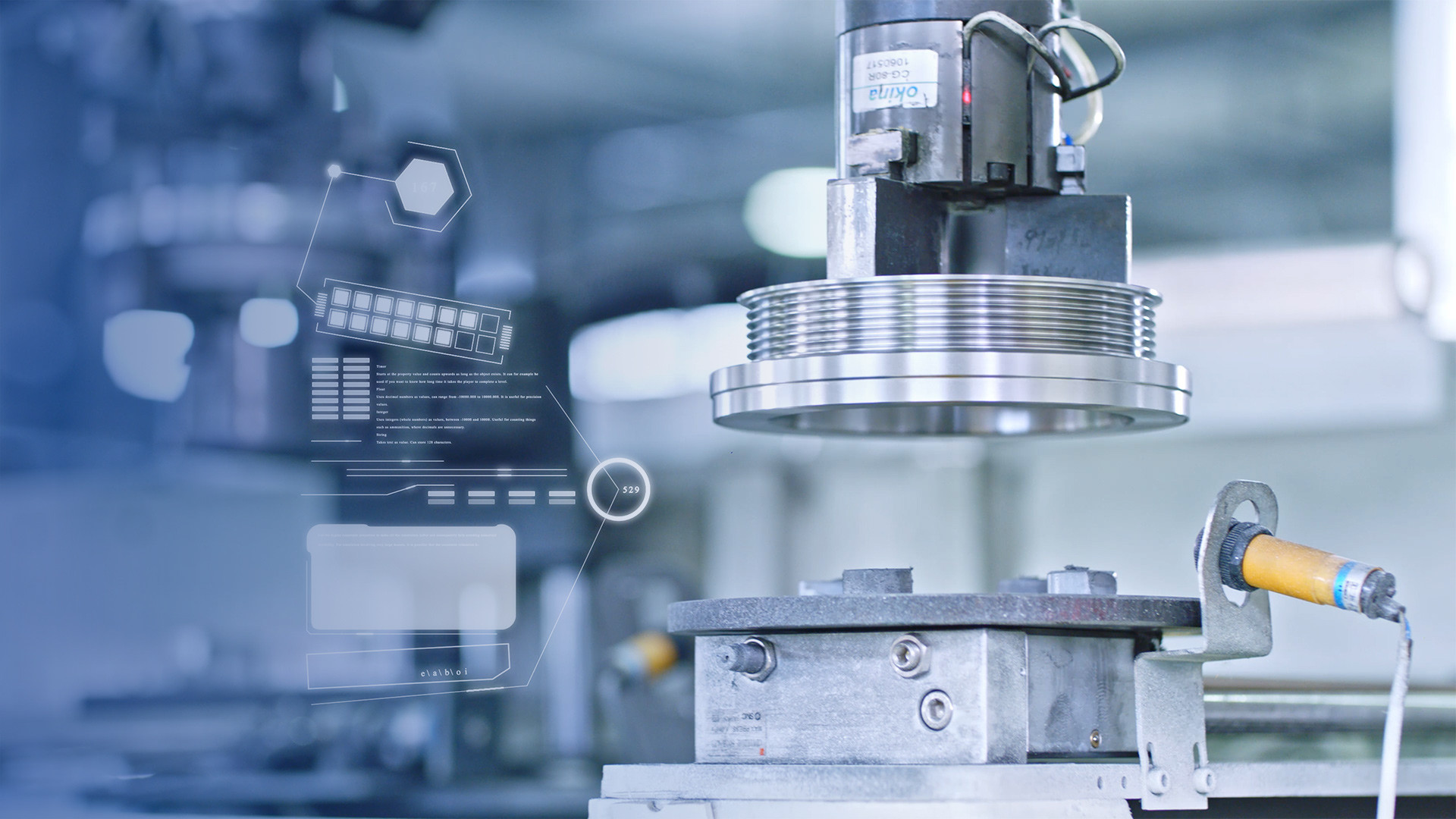

About 华体会在线登录入口

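华体会在线登录入口 was founded in September 1998 with a registered capital of RMB 146.8 million. It has three wholly owned subsidiaries: 山东阿诺达汽车零件制造有限公司, Dongli Deutschland GmbH (华体会在线登录入口 Germany) and Dongli USA Inc. (华体会在线登录入口 USA). The company listed on the ChiNext board on June 6, 2022 (stock code: 301298), raising RMB 466 million.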

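The company's core business is the casting and precision machining of components for automotive engine and chassis vibration-damping systems. Its products include pulleys, hubs, inertia rings and flywheel rings. About 80% of output is exported, and products are sold mainly in Europe, North America and the Chinese domestic market. End users include Mercedes-Benz, BMW, Audi, Volkswagen, Ford, General Motors, Porsche, Volvo, Jaguar, Peugeot, Nissan, Hyundai, Fiat and other well-known global brands. Domestic customers include Great Wall Motor and 青岛裕晋.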

Founded in: 1998

Production equipment: 500+ units

Product varieties: 800+
